In my previous blog post, I discussed the importance of AWS cost housekeeping by releasing unallocated Elastic IPs. Following that, I received a customer request to automate the process of stopping their AWS infrastructure during non-business hours and restarting it before their staff came online.

This request highlights the significance of cost-cutting measures during the COVID-19 pandemic, as businesses try to optimize their expenses in various areas.

ref — blog.lewislovelock.com/checks-for-unallocat..

Let's begin by analyzing the customer's AWS architecture.

The architecture includes a front-end proxy and jump-box (which also functions as a NAT gateway), an application server (both on EC2), and a back-end RDS database. The proxy is located in a public subnet, while the application server is in a private subnet.

To ensure a smooth connection to the RDS database, it's crucial to start the Java applications running on EC2 last and stop them first. That's why there is a 15-minute gap between the EC2 and RDS schedules at both the start and the stop.

The Customer Requirement

Now that we understand the architecture we are working with, we can review the customer request:

- Turn off the EC2 instances and RDS database 'out of hours' to reduce AWS costs
- Monday to Friday: switch off 21:00–06:00 (allowing 15 mins on each side for the RDS to be ready first)
- Saturday and Sunday: switched off
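To sanity-check the schedule before wiring anything up, the requirement can be written as a small predicate. This is only a sketch: the function name and the window constants are my own, the RDS window is assumed to be the EC2 window widened by 15 minutes on each side, and the datetimes are assumed to already be in the customer's local time.

```python
from datetime import datetime, time

# Assumed windows derived from the requirement above (local time, Monday to Friday).
EC2_WINDOW = (time(6, 0), time(21, 0))
RDS_WINDOW = (time(5, 45), time(21, 15))  # 15 minutes wider on each side


def should_be_running(now: datetime, window) -> bool:
    """Return True if `now` is on a weekday and inside the given window."""
    start, stop = window
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    return is_weekday and start <= now.time() < stop
```

For example, at 05:50 on a Monday the database should already be up while the EC2 instances are still stopped, which is exactly the ordering the Java applications need.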

Solution

Automating the start/stop process for both an EC2 instance and an RDS database can be achieved with ease by creating a Lambda function using Python. This function can then be scheduled to run at specific times using a cron expression in EventBridge.

EC2

- First, we need to allow Lambda access to EC2 with an IAM policy:

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "logs:CreateLogGroup",
                "logs:CreateLogStream",
                "logs:PutLogEvents"
            ],
            "Resource": "arn:aws:logs:*:*:*"
        },
        {
            "Effect": "Allow",
            "Action": [
                "ec2:Start*",
                "ec2:Stop*"
            ],
            "Resource": "*"
        }
    ]
}

Create a Lambda role using the above policy

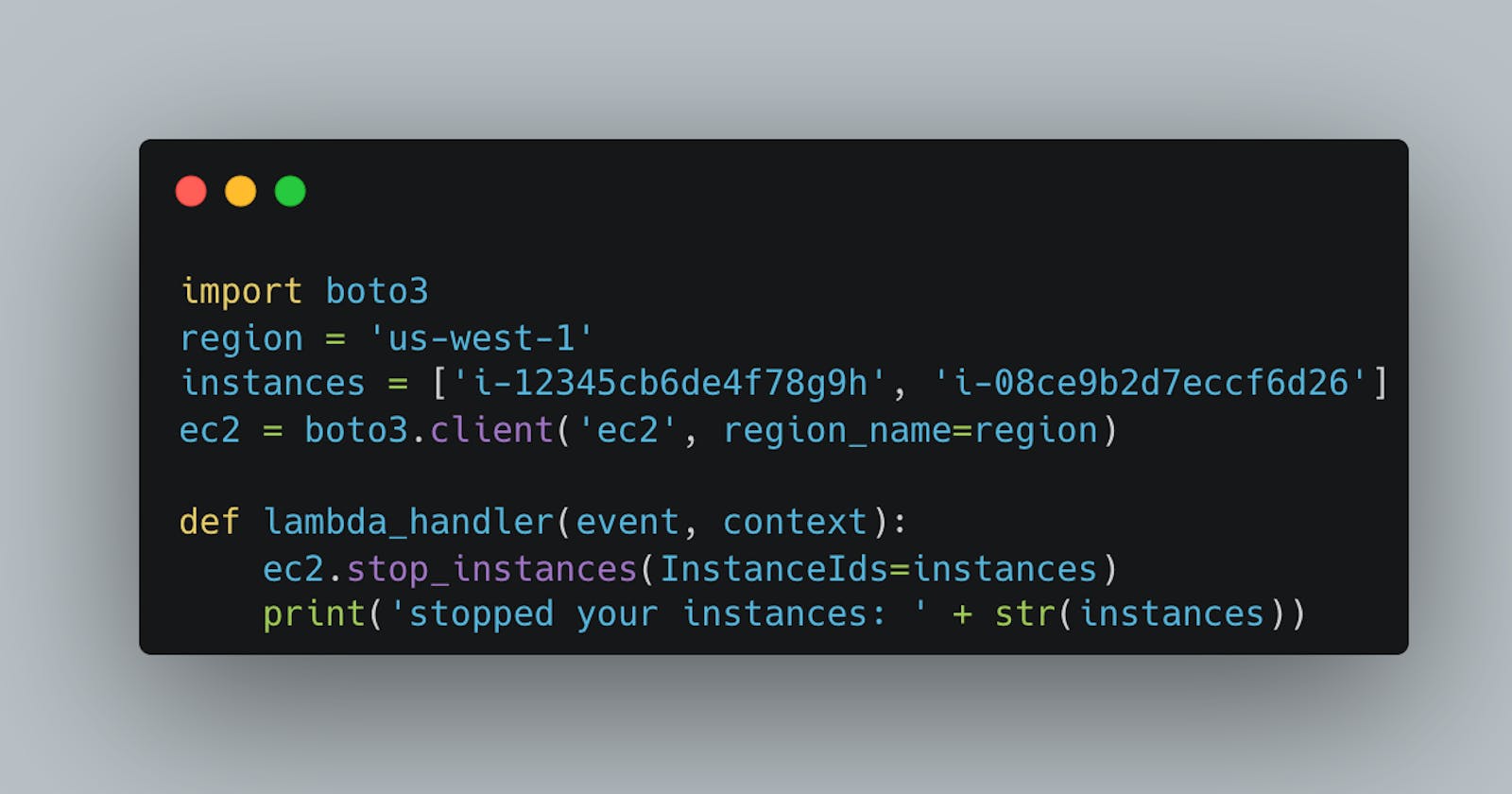
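If you prefer to script this step rather than use the console, the role can probably be created with Boto3 along these lines. Treat it as a sketch under assumptions: the helper name `create_lambda_role` and the inline policy name are hypothetical, and the trust policy is the standard one that lets the Lambda service assume the role.

```python
import json

# Trust policy that allows the Lambda service to assume the role.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_lambda_role(iam, role_name, permissions_policy):
    """Create the role and attach `permissions_policy` (a dict) as an inline policy.

    `iam` is expected to be a Boto3 IAM client, e.g. boto3.client('iam').
    Returns the ARN of the new role.
    """
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LAMBDA_TRUST_POLICY),
    )
    iam.put_role_policy(
        RoleName=role_name,
        PolicyName=role_name + '-permissions',  # hypothetical naming scheme
        PolicyDocument=json.dumps(permissions_policy),
    )
    return role['Role']['Arn']
```

Here `permissions_policy` would be the policy document shown above, loaded as a Python dict.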

Create the Lambda function to stop the EC2 instances

import boto3

region = 'us-west-1'
instances = ['i-12345cb6de4f78g9h', 'i-08ce9b2d7eccf6d26']
ec2 = boto3.client('ec2', region_name=region)

def lambda_handler(event, context):
    # Stop every instance in the list in a single API call
    ec2.stop_instances(InstanceIds=instances)
    print('stopped your instances: ' + str(instances))

Boto3 is the AWS SDK for Python. It lets you create, update, and delete AWS resources directly from your Python scripts.

- Create a second Lambda function to start the EC2 instances again

import boto3

region = 'us-west-1'
instances = ['i-12345cb6de4f78g9h', 'i-08ce9b2d7eccf6d26']
ec2 = boto3.client('ec2', region_name=region)

def lambda_handler(event, context):
    # Start every instance in the list in a single API call
    ec2.start_instances(InstanceIds=instances)
    print('started your instances: ' + str(instances))
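The two functions above differ by a single call, so one alternative is a single handler that reads the action from the incoming event. This is a sketch rather than what I deployed: the `action` key is an assumed field that each EventBridge rule would have to pass as constant JSON input, and the optional `ec2` parameter exists only so the handler can be exercised without AWS credentials.

```python
REGION = 'us-west-1'
INSTANCES = ['i-12345cb6de4f78g9h', 'i-08ce9b2d7eccf6d26']  # placeholder IDs


def get_client():
    # Imported lazily so the module can be loaded and tested without AWS access.
    import boto3
    return boto3.client('ec2', region_name=REGION)


def lambda_handler(event, context, ec2=None):
    """Start or stop INSTANCES depending on event['action'] (assumed field)."""
    action = event.get('action')
    if action not in ('start', 'stop'):
        raise ValueError("event['action'] must be 'start' or 'stop'")

    if ec2 is None:
        ec2 = get_client()

    if action == 'start':
        ec2.start_instances(InstanceIds=INSTANCES)
    else:
        ec2.stop_instances(InstanceIds=INSTANCES)

    print(action + ' requested for instances: ' + str(INSTANCES))
    return {'action': action, 'instances': INSTANCES}
```

With this approach the stop rule would send `{"action": "stop"}` and the start rule `{"action": "start"}`, and there is only one function to maintain.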

RDS

- Create an IAM policy to allow Lambda access to RDS

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "VisualEditor0",
            "Effect": "Allow",
            "Action": [
                "rds:DescribeDBClusterParameters",
                "rds:StartDBCluster",
                "rds:StopDBCluster",
                "rds:DescribeDBEngineVersions",
                "rds:DescribeGlobalClusters",
                "rds:DescribePendingMaintenanceActions",
                "rds:DescribeDBLogFiles",
                "rds:StopDBInstance",
                "rds:StartDBInstance",
                "rds:DescribeReservedDBInstancesOfferings",
                "rds:DescribeReservedDBInstances",
                "rds:ListTagsForResource",
                "rds:DescribeValidDBInstanceModifications",
                "rds:DescribeDBInstances",
                "rds:DescribeSourceRegions",
                "rds:DescribeDBClusterEndpoints",
                "rds:DescribeDBClusters",
                "rds:DescribeDBClusterParameterGroups",
                "rds:DescribeOptionGroups"
            ],
            "Resource": "*"
        }
    ]
}

Create a Lambda role using the above policy

Create the lambda function to stop the RDS instance

import boto3
import os

def shut_rds_all():
    # Region and the tag key/value that mark a database for auto-shutdown
    # are read from the Lambda function's environment variables.
    region = os.environ['REGION']
    key = os.environ['KEY']
    value = os.environ['VALUE']

    client = boto3.client('rds', region_name=region)
    response = client.describe_db_instances()

    # Collect the identifiers of every read replica.
    v_readReplica = []
    for i in response['DBInstances']:
        readReplica = i['ReadReplicaDBInstanceIdentifiers']
        v_readReplica.extend(readReplica)

    for i in response['DBInstances']:
        # Skip Aurora here: the boto3 commands differ for Aurora clusters, which are handled below.
        if i['Engine'] not in ['aurora-mysql', 'aurora-postgresql']:
            # Only stop instances that are neither a read replica nor the source of one.
            if i['DBInstanceIdentifier'] not in v_readReplica and len(i['ReadReplicaDBInstanceIdentifiers']) == 0:
                arn = i['DBInstanceArn']
                resp2 = client.list_tags_for_resource(ResourceName=arn)
                # Check if the RDS instance is part of the auto-shutdown group.
                if 0 == len(resp2['TagList']):
                    print('DB Instance {0} is not part of autoshutdown'.format(i['DBInstanceIdentifier']))
                else:
                    for tag in resp2['TagList']:
                        # If the tags match, stop the instance after validating its current status.
                        if tag['Key'] == key and tag['Value'] == value:
                            if i['DBInstanceStatus'] == 'available':
                                client.stop_db_instance(DBInstanceIdentifier=i['DBInstanceIdentifier'])
                                print('stopping DB instance {0}'.format(i['DBInstanceIdentifier']))
                            elif i['DBInstanceStatus'] == 'stopped':
                                print('DB Instance {0} is already stopped'.format(i['DBInstanceIdentifier']))
                            elif i['DBInstanceStatus'] == 'starting':
                                print('DB Instance {0} is in starting state. Please stop the instance after starting is complete'.format(i['DBInstanceIdentifier']))
                            elif i['DBInstanceStatus'] == 'stopping':
                                print('DB Instance {0} is already in stopping state.'.format(i['DBInstanceIdentifier']))
                        elif tag['Key'] != key and tag['Value'] != value:
                            print('DB instance {0} is not part of autoshutdown'.format(i['DBInstanceIdentifier']))
                        elif len(tag['Key']) == 0 or len(tag['Value']) == 0:
                            print('DB Instance {0} is not part of autoshutdown'.format(i['DBInstanceIdentifier']))
            elif i['DBInstanceIdentifier'] in v_readReplica:
                print('DB Instance {0} is a Read Replica. Cannot shutdown a Read Replica instance'.format(i['DBInstanceIdentifier']))
            else:
                print('DB Instance {0} has a read replica. Cannot shutdown a database with Read Replica'.format(i['DBInstanceIdentifier']))

    # Aurora clusters are stopped at the cluster level.
    response = client.describe_db_clusters()
    for i in response['DBClusters']:
        cluarn = i['DBClusterArn']
        resp2 = client.list_tags_for_resource(ResourceName=cluarn)
        if 0 == len(resp2['TagList']):
            print('DB Cluster {0} is not part of autoshutdown'.format(i['DBClusterIdentifier']))
        else:
            for tag in resp2['TagList']:
                if tag['Key'] == key and tag['Value'] == value:
                    if i['Status'] == 'available':
                        client.stop_db_cluster(DBClusterIdentifier=i['DBClusterIdentifier'])
                        print('stopping DB cluster {0}'.format(i['DBClusterIdentifier']))
                    elif i['Status'] == 'stopped':
                        print('DB Cluster {0} is already stopped'.format(i['DBClusterIdentifier']))
                    elif i['Status'] == 'starting':
                        print('DB Cluster {0} is in starting state. Please stop the cluster after starting is complete'.format(i['DBClusterIdentifier']))
                    elif i['Status'] == 'stopping':
                        print('DB Cluster {0} is already in stopping state.'.format(i['DBClusterIdentifier']))
                elif tag['Key'] != key and tag['Value'] != value:
                    print('DB Cluster {0} is not part of autoshutdown'.format(i['DBClusterIdentifier']))
                else:
                    print('DB Cluster {0} is not part of autoshutdown'.format(i['DBClusterIdentifier']))

def lambda_handler(event, context):
    shut_rds_all()

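Create a second Lambda function to start the RDS database, this time using def start_rds_all() in place of shut_rds_all()

The databases each function acts on are selected by tag: the REGION, KEY, and VALUE environment variables set on the Lambda function are compared with the tags on each RDS instance or cluster.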
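The start function is not listed in full above, so here is a condensed sketch of what `start_rds_all()` could look like. It mirrors the stop logic under a few assumptions of mine: the helper `has_tag` is hypothetical, only databases in the `stopped` state are started, pagination of the describe calls is left out, and the optional `client` parameter is there purely so the function can be tested without AWS.

```python
import os

AURORA_ENGINES = ('aurora-mysql', 'aurora-postgresql')


def has_tag(client, arn, key, value):
    """Return True if the resource carries the auto-shutdown tag."""
    tags = client.list_tags_for_resource(ResourceName=arn)['TagList']
    return any(t['Key'] == key and t['Value'] == value for t in tags)


def start_rds_all(client=None):
    region = os.environ['REGION']
    key = os.environ['KEY']
    value = os.environ['VALUE']
    if client is None:
        import boto3  # imported lazily so the module loads without AWS access
        client = boto3.client('rds', region_name=region)

    started = []
    # Single-instance databases; Aurora members are started via their cluster.
    for db in client.describe_db_instances()['DBInstances']:
        if db['Engine'] in AURORA_ENGINES:
            continue
        if db['DBInstanceStatus'] == 'stopped' and has_tag(client, db['DBInstanceArn'], key, value):
            client.start_db_instance(DBInstanceIdentifier=db['DBInstanceIdentifier'])
            started.append(db['DBInstanceIdentifier'])

    # Aurora clusters.
    for cluster in client.describe_db_clusters()['DBClusters']:
        if cluster['Status'] == 'stopped' and has_tag(client, cluster['DBClusterArn'], key, value):
            client.start_db_cluster(DBClusterIdentifier=cluster['DBClusterIdentifier'])
            started.append(cluster['DBClusterIdentifier'])
    return started


def lambda_handler(event, context):
    print('starting: ' + str(start_rds_all()))
```

Returning the list of identifiers keeps the log line short and makes the function easy to check in isolation.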

EventBridge (Formerly CloudWatch Events)

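Finally, I created four EventBridge rules, each configured with a cron expression, to stop and start both the EC2 instances and the RDS database. EventBridge evaluates these cron expressions in UTC, so adjust the hours if your business hours are in another time zone: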

M-F 21:00 - Stop EC2 - 00 21 ? * MON-FRI *

M-F 21:15 - Stop RDS - 15 21 ? * MON-FRI *

M-F 05:45 - Start RDS - 45 05 ? * MON-FRI *

M-F 06:00 - Start EC2 - 00 06 ? * MON-FRI *
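I set these rules up in the console, but the same four schedules could be created with Boto3. The following is a sketch under assumptions: the rule names are hypothetical, the cron fields are written without leading zeros (which should be equivalent to the expressions above), and attaching each rule to its Lambda function (`put_targets` plus an invoke permission on the function) is left out.

```python
# Rule name (hypothetical) -> schedule expression, evaluated in UTC.
SCHEDULES = {
    'stop-ec2': 'cron(0 21 ? * MON-FRI *)',
    'stop-rds': 'cron(15 21 ? * MON-FRI *)',
    'start-rds': 'cron(45 5 ? * MON-FRI *)',
    'start-ec2': 'cron(0 6 ? * MON-FRI *)',
}


def create_rules(events):
    """Create or update one scheduled rule per entry in SCHEDULES.

    `events` is expected to be a Boto3 EventBridge client,
    e.g. boto3.client('events', region_name='us-west-1').
    """
    for name, expression in SCHEDULES.items():
        events.put_rule(
            Name=name,
            ScheduleExpression=expression,
            State='ENABLED',
        )
    return list(SCHEDULES)
```

Because the rules only fire Monday to Friday, everything stopped on Friday evening stays off until the Monday 05:45 start, which covers the weekend requirement without extra rules.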